What are Technical SEO Audits?

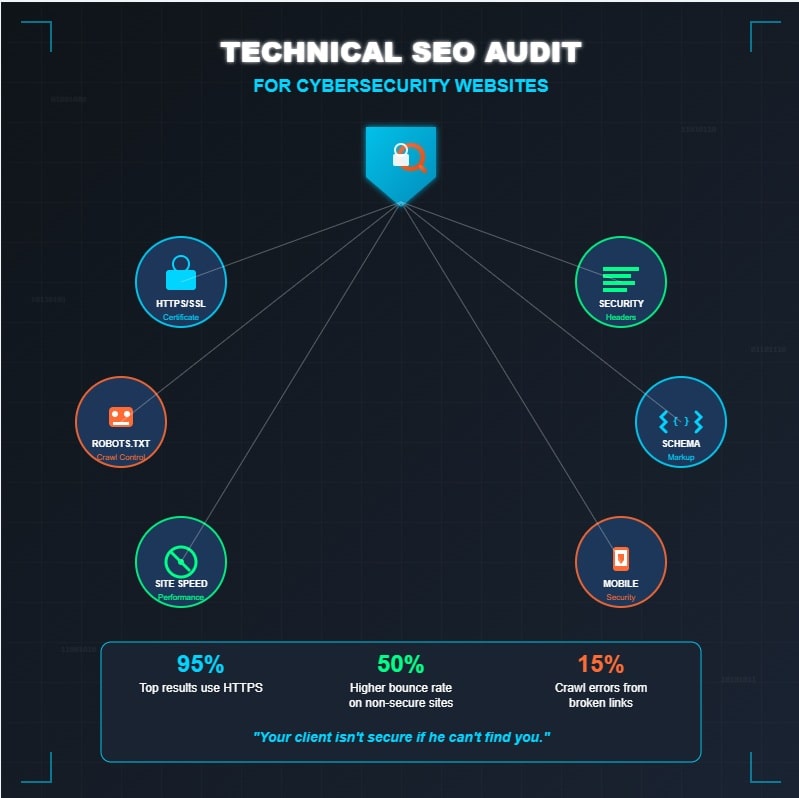

Technical SEO audits increasingly intersect with cybersecurity protocols, creating a complex landscape where optimization meets protective measures. Organizations conducting these audits must evaluate not only traditional ranking factors but also security implementations that affect site performance, user trust, and search visibility. Through systematic examination of SSL certificates, security headers, and access controls, businesses can identify vulnerabilities that potentially compromise both security posture and search rankings. What remains unclear for many practitioners, however, is how to balance rigorous security implementations with the crawlability requirements essential for peak indexing.

Key Takeaways

- Verify SSL/TLS certificate implementation and ensure proper HTTPS deployment across all website pages.

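- Analyze the robots.txt file to keep search engine crawlers out of sensitive areas like admin sections, and protect those areas with authentication, since robots.txt is publicly readable and only advisory.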

- Implement and audit security headers including HSTS, X-Content-Type-Options, and Content-Security-Policy.

- Check that schema markup is valid and exposes only information intended to be public, with no details of backend systems.

- Use Google Search Console to identify unexpectedly indexed URLs that may indicate security vulnerabilities.

The Intersection of Technical SEO and Website Security

While technical SEO traditionally focuses on optimizing websites for search engine visibility, its intricate relationship with cybersecurity has become increasingly significant in determining online success.

The convergence of these disciplines reveals compelling evidence: 95% of Google’s top search results utilize HTTPS encryption, while non-secure websites experience 50% higher bounce rates, directly impacting user engagement metrics. Additionally, mobile users have a bounce rate of 56.8%, emphasizing the need for secure and optimized web experiences across all devices.

Through thorough vulnerability assessment and security analytics implementation, organizations can simultaneously fortify their defensive posture and enhance search performance. Robust security measures help address crawlability issues and broken links, which account for 15% of crawl errors, while establishing the credibility necessary for competitive advantage in an increasingly security-conscious digital landscape. This connection becomes even more critical considering that browsers like Chrome now label pages served over plain HTTP as “Not secure,” potentially driving away security-conscious visitors.

Essential Security Protocols for SEO-Friendly Websites

Implementing robust security protocols constitutes the foundational architecture of SEO-friendly websites, creating a symbiotic relationship between user trust and search engine visibility that directly impacts organic performance.

HTTPS implementation, accompanied by properly configured SSL/TLS certificates, forms the cornerstone of this security framework, while strategic deployment of security headers, including HSTS, Content-Security-Policy, and X-Content-Type-Options, provides critical defense against prevalent cyber threats.

Furthermore, thorough data encryption practices, supported by regular security training for all stakeholders who manage website operations, facilitate compliance with increasingly stringent privacy regulations while simultaneously strengthening the site’s technical SEO profile. Integrating a reliable Web Application Firewall significantly enhances protection against malicious traffic patterns while maintaining optimal website performance.

Website Crawlability and Security Barriers Assessment

Assessing website crawlability within a cybersecurity framework requires thorough robots.txt file analysis, which establishes critical parameters for search engine access while keeping crawlers away from sensitive areas. Additionally, Google’s ranking algorithm uses over 200 factors, highlighting the importance of optimization in conjunction with security measures.

Encryption protocol evaluation, including proper HTTPS implementation and SSL certificate verification, serves as the foundation for balancing security requirements against crawler accessibility, thereby preventing inadvertent blocking of legitimate bot traffic. Regular sitemap security checks, incorporating both structural validation and permission configurations, guarantee that while protective measures remain intact, search engines maintain peak visibility of authorized content, ultimately supporting both security objectives and search performance metrics. Identifying and resolving any duplicate content issues can significantly improve crawlability while reducing security vulnerabilities created by redundant data exposure.

Robots.txt File Analysis

The robots.txt file serves as a critical gateway between a website and the numerous crawlers that attempt to index its content, functioning simultaneously as both an SEO tool and a potential security vulnerability point that requires meticulous analysis during technical audits.

When examining crawler behavior, security professionals must recognize that robots.txt implications extend beyond mere crawling instructions, potentially revealing sensitive directories or resources to malicious actors who, unlike legitimate crawlers, may deliberately target disallowed areas.

For extensive protection, organizations should:

- Implement proper authentication mechanisms

- Regularly review disallow directives

- Verify crawlability of public content

- Test security beyond robots.txt restrictions

It’s essential to understand that relying on robots.txt as a security measure is fundamentally flawed since it represents an information exposure risk classified under CWE-200 when used improperly.
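The review steps above can be sketched with Python's standard `urllib.robotparser`. This is a minimal illustration, not a complete audit: the sample file, its paths, and the `example.com` URLs are hypothetical, and the helper names are invented for this example. It lists what the Disallow lines reveal to any reader and checks that public pages stay crawlable.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the paths are illustrative only.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /admin/
Disallow: /internal-reports/
Allow: /
"""


def disallowed_paths(text: str) -> list:
    """Return every Disallow path, i.e. what the file reveals to any reader."""
    paths = []
    for line in text.splitlines():
        key, _, value = line.partition(":")
        if key.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths


def blocked_urls(text: str, urls: list, agent: str = "Googlebot") -> list:
    """Return the URLs from `urls` that the given crawler may not fetch."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    print("Paths disclosed by robots.txt:", disallowed_paths(SAMPLE_ROBOTS))
    public_pages = ["https://example.com/blog/post", "https://example.com/admin/login"]
    print("Blocked for Googlebot:", blocked_urls(SAMPLE_ROBOTS, public_pages))
```

Every path returned by `disallowed_paths` should be treated as publicly known and protected by authentication, not by the Disallow line itself.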

Encryption Protocol Evaluation

Encryption protocol evaluation represents the cornerstone of any thorough technical SEO audit for cybersecurity, where the intersection of search engine accessibility and robust security measures demands meticulous analysis beyond surface-level configurations.

When examining protocols, organizations must prioritize implementations that balance security with crawlability, particularly focusing on SSL/TLS configurations that support both modern encryption algorithms and search engine requirements.

Effective key management practices, including regular rotation and proper storage, constitute essential elements of protocol assessment, especially when considering that outdated encryption methodologies can greatly impact both security posture and search ranking performance simultaneously. Implementing format-preserving encryption can help maintain data usability while ensuring sensitive information remains protected during crawling operations.

TLS configurations can be tested with tools like Qualys SSL Labs, and the results should be documented as part of the audit.
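For a quick local check alongside an external scanner, the sketch below uses Python's standard `ssl` module to build a client context that refuses anything older than TLS 1.2 and to report the protocol a server negotiates. The function names are invented for this example, the TLS 1.2 floor is an assumed policy, and `negotiated_protocol` needs network access to the host being tested.

```python
import socket
import ssl


def strict_tls_context() -> ssl.SSLContext:
    """Client context with certificate validation on and a TLS 1.2 floor."""
    context = ssl.create_default_context()
    # Assumed policy for this sketch: reject TLS 1.0 and 1.1 handshakes.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def negotiated_protocol(host: str, port: int = 443, timeout: float = 5.0):
    """Connect to `host` and return the negotiated protocol name, e.g. 'TLSv1.3'.

    Raises ssl.SSLError if the server cannot meet the context's requirements.
    """
    context = strict_tls_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version()
```

A handshake failure here suggests the server only offers outdated protocols or presents a certificate that does not validate, both of which deserve follow-up in the audit.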

Sitemap Security Checks

Thorough sitemap security assessments form the critical foundation where website discoverability meets robust protection mechanisms, requiring meticulous attention to both structural elements and potential vulnerability vectors that could compromise site integrity.

When evaluating sitemap accessibility, practitioners must ensure XML files conform to the sitemap protocol and reside in the site root, where crawlers can read them but only authorized users can modify them. Serving the sitemap over HTTPS further protects it in transit. Regular monitoring of indexed pages through Google Search Console can help identify unauthorized content exposure or indexing anomalies that might indicate security breaches.

Additionally, sitemap validation processes should incorporate extensive checks for canonical URLs, proper UTF-8 encoding, and absence of tracking parameters that might expose sensitive configuration details to malicious actors scanning for exploitable entry points.
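Two of these checks, HTTPS-only URLs and the absence of tracking parameters, can be sketched with the standard library. The parameter list below is an illustrative assumption, not an exhaustive set, and `audit_sitemap` is a name chosen for this example.

```python
import xml.etree.ElementTree as ET
from urllib.parse import parse_qsl, urlsplit

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
# Illustrative list only; extend it to match the parameters a site actually uses.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "gclid", "fbclid"}


def audit_sitemap(xml_text: str) -> dict:
    """Flag sitemap URLs that are not HTTPS or that carry tracking parameters."""
    root = ET.fromstring(xml_text)
    issues = {"not_https": [], "tracking_params": []}
    for loc in root.findall("sm:url/sm:loc", SITEMAP_NS):
        url = (loc.text or "").strip()
        parts = urlsplit(url)
        if parts.scheme != "https":
            issues["not_https"].append(url)
        if any(key.lower() in TRACKING_PARAMS for key, _ in parse_qsl(parts.query)):
            issues["tracking_params"].append(url)
    return issues


SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>http://example.com/old-page</loc></url>
  <url><loc>https://example.com/pricing?utm_source=newsletter</loc></url>
</urlset>"""

if __name__ == "__main__":
    print(audit_sitemap(SAMPLE_SITEMAP))
```

Canonical-URL and encoding checks would need the live pages and raw bytes respectively, so they are left out of this sketch.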

Identifying and Resolving Security-Related Indexing Issues

Numerous security vulnerabilities lurk within poorly managed website indexing practices, creating significant exposure points that cybercriminals actively exploit to compromise sensitive data and system integrity.

Organizations must systematically identify and remediate indexing vulnerabilities through thorough technical audits, which reveal URL misconfigurations and improper crawling permissions that frequently lead to inadvertent exposure of sensitive content.

- Implement restrictive robots.txt directives to prevent search engines from accessing admin sections

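- Deploy noindex meta tags on login pages, user profiles, and internal resources, keeping those URLs crawlable so the tag can be read (a crawler never sees a noindex tag on a page that robots.txt blocks)

- Regularly audit Google Search Console for unexpectedly indexed URLs that may indicate a security breach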

- Remove sensitive directories from sitemaps to minimize data exposure risks

- Configure proper X-Robots-Tag headers at the server level for thorough crawl control
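To verify that the noindex and X-Robots-Tag controls in this list are actually in place, a response can be checked for either signal. The sketch below assumes the headers and HTML have already been fetched. It also simplifies the header format by ignoring user-agent-specific forms such as `googlebot: noindex`, and `is_noindexed` is a name invented for this example.

```python
from html.parser import HTMLParser

NOINDEX_DIRECTIVES = {"noindex", "none"}  # "none" is shorthand for noindex, nofollow


class RobotsMetaParser(HTMLParser):
    """Collects the directives from every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        if attr.get("name", "").lower() == "robots":
            content = attr.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]


def is_noindexed(headers: dict, html: str) -> bool:
    """True if the X-Robots-Tag header or a robots meta tag asks for noindex."""
    header_value = next(
        (value for key, value in headers.items() if key.lower() == "x-robots-tag"), ""
    )
    header_directives = {d.strip().lower() for d in header_value.split(",")}
    parser = RobotsMetaParser()
    parser.feed(html)
    return bool(NOINDEX_DIRECTIVES & (header_directives | set(parser.directives)))
```

Running this over login pages and other internal URLs gives a quick list of pages that still lack an indexing control.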

The absence of a properly configured SSL certificate creates an immediate security vulnerability that not only triggers browser warnings but significantly increases the risk of data interception during transmission.

SSL Certificate Implementation and Monitoring for Search Rankings

Beyond proper indexing practices, SSL certificate implementation represents a foundational security measure with significant implications for both cybersecurity posture and search engine visibility.

While Google acknowledges HTTPS as a ranking signal, albeit a minor one compared to content quality and relevance, the benefits of SSL certificates extend beyond rankings to encompass user trust and data protection, which indirectly influence engagement metrics.

Organizations must establish robust certificate renewal strategies, including automated monitoring systems and calendar-based alerts, because an expired certificate triggers browser warnings that can dramatically increase bounce rates and damage both user experience and search visibility. Recent analysis shows that less than 10% of websites have implemented HTTPS flawlessly, highlighting the critical need for technical expertise during implementation.
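An automated expiry alert can be as small as the sketch below. It assumes the certificate's `notAfter` string has already been obtained, for example from `ssl.SSLSocket.getpeercert()`. The 30-day threshold and the function names are assumptions for illustration.

```python
import ssl
from datetime import datetime, timezone
from typing import Optional


def days_until_expiry(not_after: str, now: Optional[datetime] = None) -> int:
    """Whole days between `now` (default: current UTC time) and certificate expiry.

    `not_after` uses the certificate date format, e.g. 'Jun  1 12:00:00 2030 GMT'.
    """
    expires = datetime.fromtimestamp(ssl.cert_time_to_seconds(not_after), tz=timezone.utc)
    now = now or datetime.now(timezone.utc)
    return (expires - now).days


def needs_renewal(not_after: str, threshold_days: int = 30,
                  now: Optional[datetime] = None) -> bool:
    """True when the certificate expires within the (assumed) alert threshold."""
    return days_until_expiry(not_after, now) <= threshold_days
```

Scheduling a check like this daily, and alerting well before the threshold, leaves time to renew before browsers start showing warnings.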

Security Headers Optimization for Technical SEO Performance

Implementing robust security headers constitutes a critical, yet often overlooked component of technical SEO strategy, particularly for organizations operating in high-risk sectors where data protection remains paramount.

Through thorough implementation of security headers, including HSTS, X-Content-Type-Options, and Content-Security-Policy, websites can fortify protection against common vulnerabilities while simultaneously fostering user trust, which indirectly bolsters search performance metrics.

- HSTS implementation forces HTTPS connections, preventing protocol downgrade attacks

- X-Content-Type-Options with “nosniff” value prevents MIME-type confusion exploits

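- X-XSS-Protection enabled a cross-site scripting filter in older browsers; it is a legacy header that Chrome, Edge, and Firefox do not act on, so Content-Security-Policy should carry that protection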

- Content-Security-Policy restricts resource execution to trusted domains

Security headers optimization requires strategic implementation with regular auditing for maximum efficacy. Regular inspection using tools like Chrome DevTools allows SEO professionals to verify header configurations and identify potential security vulnerabilities.
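The same verification can be scripted for regular audits. The sketch below assumes the response headers have already been collected into a dictionary. The validity rules are deliberately loose assumptions (for example, any non-empty Content-Security-Policy counts as present), and the names are invented for this example.

```python
# Header name -> a loose validity check on its value (assumptions for this sketch).
EXPECTED_HEADERS = {
    "strict-transport-security": lambda value: "max-age=" in value.lower(),
    "x-content-type-options": lambda value: value.strip().lower() == "nosniff",
    "content-security-policy": lambda value: bool(value.strip()),
}


def audit_security_headers(headers: dict) -> dict:
    """Report each expected header as 'ok', 'missing', or 'present but misconfigured'."""
    normalized = {name.lower(): value for name, value in headers.items()}
    report = {}
    for name, is_valid in EXPECTED_HEADERS.items():
        if name not in normalized:
            report[name] = "missing"
        elif not is_valid(normalized[name]):
            report[name] = "present but misconfigured"
        else:
            report[name] = "ok"
    return report


if __name__ == "__main__":
    sample = {
        "Strict-Transport-Security": "max-age=31536000; includeSubDomains",
        "X-Content-Type-Options": "sniff",
    }
    for header, status in audit_security_headers(sample).items():
        print(f"{header}: {status}")
```

A check like this only confirms that headers exist and look plausible; the quality of a Content-Security-Policy still needs manual review.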

Site Speed Enhancement While Maintaining Security Measures

Security measures, while essential for website protection, often introduce scripts and plugins that can greatly impact load times, requiring a strategic balance between protection and performance.

Technical SEO practitioners must evaluate each security plugin’s necessity, removing redundant tools that duplicate functionality, while consolidating essential security functions into lightweight, efficient implementations.

Through careful configuration of security scripts, including proper asynchronous loading and strategic placement in the document flow, organizations can maintain robust protection without sacrificing the speed metrics that influence both search rankings and user experience.

Implementing optimal security solutions prevents potentially damaging cyber-attacks that can slow down websites and compromise sensitive customer information.

Minimize Plugin Usage

Every WordPress plugin added to a cybersecurity website creates potential vulnerabilities while simultaneously increasing load times. This necessitates a strategic approach to plugin management that balances functionality against performance impacts.

When evaluating plugin efficiency, organizations must implement a disciplined review process, considering both security implications and performance metrics. This ensures only essential plugins remain active within the website architecture. Since approximately 43% of cyber attacks target small businesses, including cybersecurity firms, minimizing plugin usage becomes even more critical for reducing potential entry points for hackers.

- Conduct regular plugin audits to identify and remove redundant or underperforming installations

- Prioritize multi-functional plugins that reduce overall count while maintaining capabilities

- Configure lazy loading mechanisms to defer non-critical plugin activation until needed

- Implement thorough performance monitoring to quantify each plugin’s impact

- Select only plugins with active development cycles and regular security updates

Balance Security Scripts

Balancing security scripts with performance demands constitutes a critical challenge for cybersecurity websites, where each additional protective measure potentially introduces latency that compromises user experience and search engine rankings.

Implementing asynchronous loading for non-essential security scripts, while strategically employing CDN integration, enables organizations to optimize script performance without sacrificing protective capabilities.

Furthermore, regular performance audits, which identify security trade-offs between protection and speed, should inform an evidence-based approach to script selection.

Through techniques such as lazy loading and browser caching, coupled with thorough dependency management, security professionals can maintain robust defenses while minimizing the 500-1500ms overhead typically introduced by third-party security tools.

Utilizing proper loading attributes like async and defer can prevent security scripts from blocking critical HTML parsing while still maintaining their protective functions.
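One way to find candidates for `async` or `defer` is to list external scripts in the document head that carry neither attribute. The sketch below uses Python's built-in HTML parser. The class name and sample script paths are hypothetical, and module scripts are skipped because browsers defer them by default.

```python
from html.parser import HTMLParser


class BlockingScriptFinder(HTMLParser):
    """Collects external <script> tags in <head> that block HTML parsing."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.blocking = []

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head:
            attr = dict(attrs)  # boolean attributes appear with a value of None
            is_module = (attr.get("type") or "").lower() == "module"
            if attr.get("src") and not is_module and "async" not in attr and "defer" not in attr:
                self.blocking.append(attr["src"])

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def find_blocking_scripts(html: str) -> list:
    finder = BlockingScriptFinder()
    finder.feed(html)
    return finder.blocking


SAMPLE_PAGE = (
    '<html><head>'
    '<script src="/security/waf-client.js"></script>'
    '<script src="/security/bot-check.js" defer></script>'
    '<script async src="/analytics.js"></script>'
    '</head><body><script src="/late.js"></script></body></html>'
)

if __name__ == "__main__":
    print(find_blocking_scripts(SAMPLE_PAGE))
```

For the sample page, only `/security/waf-client.js` is reported. Whether a given security script can safely load later depends on what it protects, so each result needs a case-by-case decision.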

Mobile Security Considerations in Technical SEO Audits

As mobile devices become increasingly central to users’ digital interactions, implementing robust security measures specifically tailored to mobile environments constitutes a critical component of extensive technical SEO audits for cybersecurity professionals.

When conducting assessments, security specialists must evaluate mobile vulnerabilities while ensuring search performance isn’t compromised by protective implementations that might inadvertently block crawlers or slow page loading. Google considers HTTPS a ranking signal and prioritizes secure websites in search results, making security implementation essential for both protection and visibility.

- Implement robust user data protection mechanisms without hindering mobile responsiveness

- Regularly schedule security updates for mobile-specific elements during low-traffic periods

- Deploy anti-phishing prevention measures that don’t trigger false positives with legitimate mobile redirects

- Utilize secure coding practices that maintain compatibility with mobile-first indexing requirements

- Establish extensive malware prevention systems that minimize impact on mobile page speed

Structured Data Implementation With Security Best Practices

Implementing structured data for cybersecurity purposes requires balancing rich search engine visibility with stringent security protocols, particularly when encoding sensitive information through schema markup.

Organizations must consider implementing security-focused schemas that communicate threat protection capabilities while ensuring their JSON-LD implementation follows encryption standards and access control measures that prevent exposure of backend systems. Effective data security policies can provide direction for users on how to handle the structured data properly.

Technical validation of structured data markup through tools like Google’s Rich Results Test and Schema.org’s Validator should be conducted regularly, as malformed or incorrect schema implementations can create security vulnerabilities that sophisticated threat actors might exploit.

Schema For Threat Protection

While most organizations recognize schema markup primarily as an SEO tool, the strategic implementation of structured data carries significant cybersecurity implications that extend far beyond search visibility.

Through careful schema implementation, which structures website data in standardized formats that facilitate machine interpretation, organizations can enhance their threat protection posture by improving how security systems identify and categorize potential vulnerabilities.

- Schema benefits include enhanced threat detection through improved data interpretation

- Structured data provides uniform implementation patterns that reduce security-relevant coding errors

- Schema markup facilitates more effective crawling, potentially exposing hidden vulnerabilities

- Adherence to schema standards supports broader compliance with security frameworks

- Advanced security platforms leverage structured data for more precise threat identification

Modern SEO strategies now emphasize this integration as secure user experience has become a critical ranking factor that Google evaluates alongside traditional optimization elements.

Secure JSON-LD Implementation

The secure implementation of JSON-LD structured data represents a critical intersection between technical SEO optimization and robust cybersecurity practices, necessitating meticulous attention to both architectural integrity and protective measures throughout deployment.

Organizations must prioritize the utilization of secure data types within their structured data, ensuring native JSON formats that minimize exploitation vectors while maximizing machine readability. Implementing a properly defined @context element transforms standard JSON documents into self-describing, interoperable data structures that resist semantic ambiguity.

When implementing JSON-LD, professionals should cache generated JSON-LD output to enhance performance and reduce server load, which also lowers exposure to denial-of-service conditions.

In addition, adherence to W3C guidelines for JSON-LD 1.1, coupled with regular security audits, forms the cornerstone of an extensive security posture that safeguards structured data implementations against emerging threats.
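One concrete risk when JSON-LD is generated from user-influenced data is a value that closes the script element early. A minimal sketch of a safer embedding step is shown below. `render_json_ld` is a name chosen for this example, and escaping these three characters is one common approach, not the only one.

```python
import json


def render_json_ld(data: dict) -> str:
    """Serialize `data` into a JSON-LD script element that is safe to embed in HTML."""
    payload = json.dumps(data, ensure_ascii=False)
    # Replace characters that could end the script element or start markup with
    # their JSON unicode escapes; JSON parsers decode them back to the originals.
    payload = (
        payload.replace("<", "\\u003c")
        .replace(">", "\\u003e")
        .replace("&", "\\u0026")
    )
    return f'<script type="application/ld+json">{payload}</script>'


if __name__ == "__main__":
    organization = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": "Example Security </script><script>alert(1)</script>",
    }
    print(render_json_ld(organization))
```

The output contains a single closing script tag, and parsers that read the JSON recover the original string unchanged.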

Error-Free Markup Validation

Error-free markup validation stands as a foundational pillar in the secure implementation of structured data, requiring meticulous attention to both technical accuracy and security considerations throughout the validation process.

Organizations implementing schema markup must, through systematic error monitoring and thorough schema validation protocols, establish rigorous quality control mechanisms that prevent potential vulnerabilities while ensuring optimal search engine visibility.

Essential validation measures include:

- Implementing automated validation tools alongside manual review processes

- Establishing quarterly schema audit schedules with documented remediation procedures

- Integrating security checks within the validation workflow

- Validating data types and required fields against current schema.org standards

- Monitoring schema performance metrics to identify potential implementation anomalies

Using clean HTML code provides search engines with enhanced understanding of your content structure while simultaneously reducing potential security vulnerabilities in your markup.
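An automated pre-publish check can catch the most basic errors before markup reaches external validators. In the sketch below, the required-field table is an illustrative assumption, not an official schema.org or Google requirement list, and only a single top-level object with a string `@type` is handled.

```python
import json

# Illustrative assumption: fields this hypothetical site requires per type.
REQUIRED_FIELDS = {
    "Organization": {"name", "url"},
    "Article": {"headline", "datePublished"},
}


def validate_json_ld(raw: str) -> list:
    """Return a list of human-readable problems; an empty list means no issues found."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be an object"]

    errors = []
    if "@context" not in data:
        errors.append("missing @context")
    schema_type = data.get("@type")
    if schema_type is None:
        errors.append("missing @type")
    elif isinstance(schema_type, str):
        missing = REQUIRED_FIELDS.get(schema_type, set()) - set(data)
        errors += [f"{schema_type}: missing {field}" for field in sorted(missing)]
    return errors
```

Wiring a check like this into a build or deployment step covers the automated half of the validation measures listed above, while tools such as the Rich Results Test remain the reference for search-engine-specific requirements.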

Creating an Actionable Security-Focused SEO Improvement Plan

Developing an extensive, security-focused SEO improvement plan requires methodical integration of cybersecurity principles with technical optimization strategies, thereby creating a robust framework that simultaneously enhances visibility while fortifying digital assets against emerging threats.

Organizations must establish security-focused metrics, including vulnerability assessment scores and encryption implementation rates, which, when analyzed against industry benchmarks, provide quantifiable indicators of progress.

Moreover, implementing thorough risk assessment strategies, encompassing threat modeling and attack surface analysis, enables prioritization of remediation efforts according to potential business impact rather than merely technical severity. Incorporating multi-factor authentication into website administration areas significantly strengthens your digital security posture against unauthorized access attempts.
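Prioritizing by business impact before technical severity can be expressed as a simple sort. The 1-to-5 scales, the field names, and the sample findings below are all assumptions made for illustration; a real plan would take its scores from the organization's own risk assessment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    name: str
    technical_severity: int  # assumed scale: 1 (low) to 5 (critical)
    business_impact: int     # assumed scale: 1 (low) to 5 (critical)


def prioritize(findings: list) -> list:
    """Order findings by business impact first, with technical severity as tie-breaker."""
    return sorted(
        findings,
        key=lambda f: (f.business_impact, f.technical_severity),
        reverse=True,
    )


if __name__ == "__main__":
    backlog = [
        Finding("Missing HSTS header on marketing blog", technical_severity=4, business_impact=2),
        Finding("Admin login page indexed by search engines", technical_severity=3, business_impact=5),
        Finding("Certificate on checkout expires in 5 days", technical_severity=4, business_impact=5),
    ]
    for finding in prioritize(backlog):
        print(finding.business_impact, finding.technical_severity, finding.name)
```

With these sample scores, the two checkout and admin findings rank above the HSTS gap even though its technical severity is equal or higher.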

Conduct a Technical SEO Audit Today!

Conducting an extensive technical SEO audit with cybersecurity as a focal point enables organizations to establish a robust digital foundation that simultaneously satisfies search engine algorithms and protects sensitive assets. Through systematic implementation of security protocols, proper crawl management, and vigilant monitoring of technical vulnerabilities, businesses can achieve the dual objective of enhanced visibility and fortified defense mechanisms, thereby securing competitive advantage while safeguarding their digital ecosystem against evolving threats.

Frequently Asked Questions

How Do Security Plugins Affect Crawl Budget Allocation?

Security plugins can impact crawl budget by adding overhead, creating redirects, or affecting server response times. Evaluating plugin effectiveness helps guarantee security measures don’t compromise crawling efficiency or resource allocation.

Can Security-Related URL Parameters Impact Keyword Rankings?

Security parameters in URLs can dilute keyword rankings by creating duplicate content issues, splitting link equity, and wasting crawl budget—requiring canonical tags and parameter configurations for ideal search visibility.

Do WAF Implementations Negatively Influence Organic Search Visibility?

Properly configured WAF implementations typically enhance rather than diminish organic visibility. A well-tuned WAF supports SEO by preventing downtime, improving site security, and maintaining fast page load speeds, provided its rules do not block legitimate crawlers.

How Often Should Robots.txt Be Updated for Security Purposes?

Effective robots.txt management requires quarterly updates at minimum, with immediate revisions following structural website changes or security incidents. This cadence maintains ideal security compliance while adapting to emerging threat landscapes.

What Security Vulnerabilities Can Arise From Improper Redirect Implementations?

Improper redirects create two main vulnerabilities: redirect loops, which frustrate users, and open redirects, in which attackers exploit a trusted domain to send visitors toward malicious endpoints designed to harvest credentials or install malware.
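A common defense against redirect abuse is to validate the target before issuing the redirect. The sketch below accepts only same-site paths or HTTPS URLs on an allow-list. The host names are hypothetical and `is_safe_redirect` is a name invented for this example, so treat it as a starting point, not a complete filter.

```python
from urllib.parse import urlsplit

# Hypothetical allow-list; replace with the hosts the site actually controls.
ALLOWED_HOSTS = {"example.com", "www.example.com"}


def is_safe_redirect(target: str, allowed_hosts=ALLOWED_HOSTS) -> bool:
    """True for same-site paths or HTTPS URLs whose host is on the allow-list."""
    # Backslashes and control characters are handled inconsistently by browsers.
    if "\\" in target or any(ch in target for ch in "\r\n\t"):
        return False
    parts = urlsplit(target)
    if not parts.scheme and not parts.netloc:
        # Relative target: require a single leading slash ("//host" is another site).
        return target.startswith("/") and not target.startswith("//")
    return parts.scheme == "https" and parts.hostname in allowed_hosts
```

Targets that fail the check can fall back to a fixed default page, which also avoids building redirect chains from user-supplied values.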